The popular Silicon Valley mantra “Fail Fast, Fail Often” is often misunderstood. The idea is not to fail repeatedly until one gets it right. Rather, it refers to a very specific kind of failure: one that is fast, cheap, and reveals something new and interesting. This is the right kind of failure Silicon Valley is obsessed with. In her book The Right Kind of Wrong, Amy Edmondson, a professor at Harvard Business School, explains the difference between good failures and bad ones. This is part one of a two-part series on lessons from this book. This post covers the different kinds of failures and why some are better than others. In the next post, I will talk about how to create a culture of psychological safety that encourages good failures.

Basic failures

Basic failures are common, everyday errors caused by oversight, neglect, inattention, forgetfulness, faulty assumptions based on poor logic or scant evidence, and the distractions of modern life. Basic failures are unproductive, drain energy and resources, and are the furthest from the kind of failure we all want to encourage.

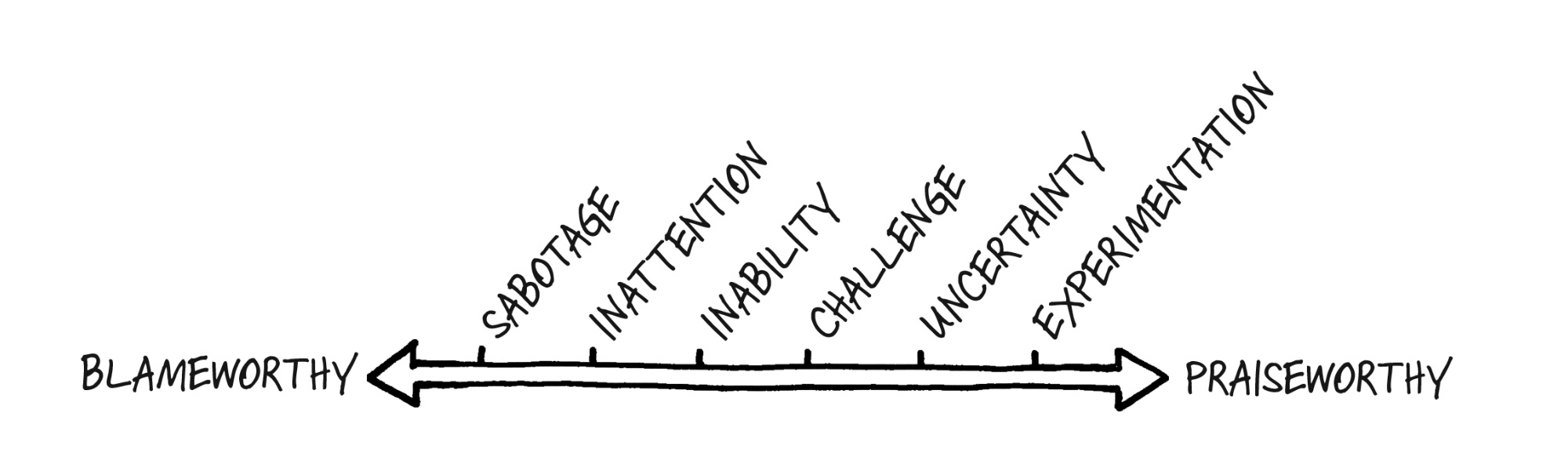

At this juncture it is perhaps important to look at failures in a spectrum. The infograhic below talks about a spectrum of failures. The left side of the spectrum is where basic failures lie and the right side is where intelligent failures lie. The failures on the left side are blameworthy while those on the right side are praiseworthy. Worth mentioning that almost all the failures we encounter in our work or personal life lie somewhere in the middle of the spectrum.

Avoiding basic failures is relatively easy: it mostly requires good processes and systems. Some ways to avoid them are listed below.

- Utilizing Checklists and Standardization: Seasoned professionals such as pilots follow meticulous checklists and standardized procedures before every flight to make sure the aircraft is functioning properly. In The Checklist Manifesto, Atul Gawande describes the transformative impact of checklists in medicine: better performance, fewer errors, and lives saved. However, it’s crucial to acknowledge that checklists have their limits.

- Cultivating a Safety-Centric Culture: Making excellence a habit requires an unwavering commitment to attention to detail. Bringing safety front and center ensures that all reasonable precautions are taken to avoid basic failures that could lead to accidents or injuries.

- Fostering a Culture of Blameless Reporting: Encouraging employees to report adverse events and issues openly, without fear of repercussions, is paramount. Establishing your own version of Toyota’s “Andon Cord,” which lets any worker stop the production line when they spot a problem, signals that speaking up is non-punitive and encourages the free exchange of information about errors and their solutions.

- Proactive Maintenance: Overcoming “temporal discounting,” our tendency to prioritize immediate concerns over potential future problems, is essential. Regular, routine maintenance has an outsized impact on preventing basic failures.

- Mandatory Training Programs: Ensuring that all personnel thoroughly understand the equipment, code, or other relevant subject matter is indispensable. Continuously updating training based on past errors keeps everyone’s skills current.

- Implementing Poka-Yoke Principles: The concept of “poka-yoke,” or error-proofing, involves designing systems that prevent, correct, or flag errors as they occur. It’s akin to childproofing furniture or medicine bottles: the design itself heads off blunders before they happen.

Basic failures are still helpful in one respect: they are a good way to practice learning from past mistakes and to get used to the emotional discomfort that comes with failure.

Complex failures

Complex failures, like basic failures, typically occur in familiar settings, but they arise when several small issues accumulate and combine. They are shaped by a mix of internal and external factors, such as mounting distractions and the overconfidence that repetition can breed. Interconnectedness also plays a significant role, as the COVID-19 pandemic showed.

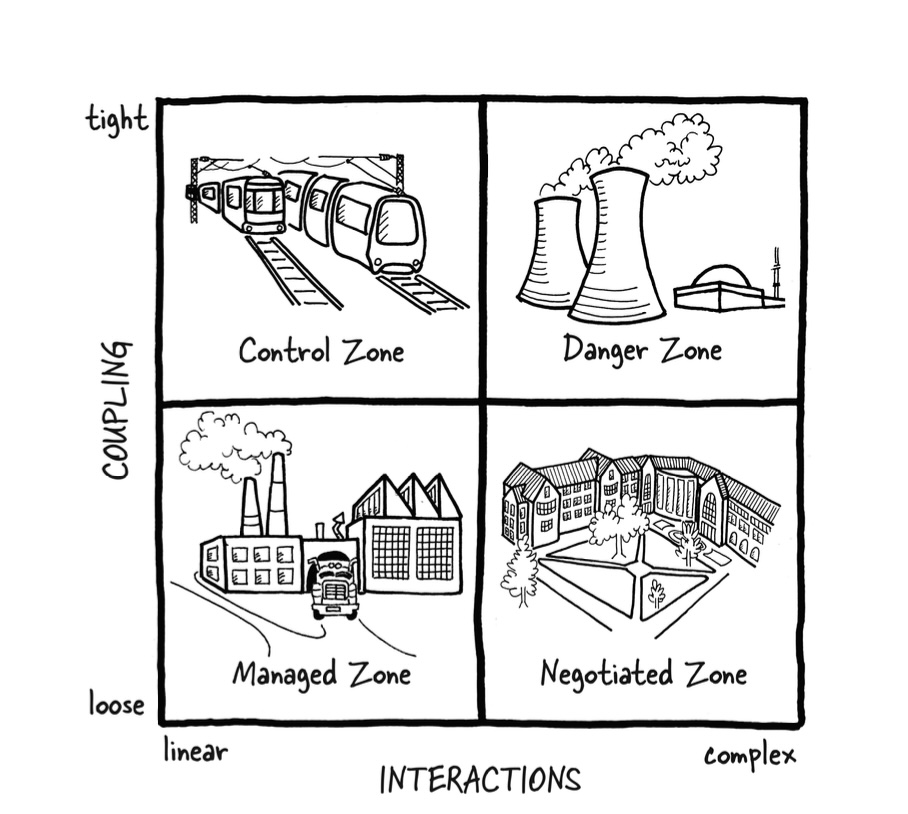

Interactive complexity emerges when multiple systems collaborate, making it challenging to predict the consequences of a minor error or change in one system. Perrow extensively explores this concept in “Normal Accidents.” In today’s world, disasters are more likely to occur when systems are tightly interconnected and their interactions are intricate. The diagram below shows how interactive complexity and tight coupling can lead to complex failures.

One way to understand complex failures is the Swiss cheese model. Picture a stack of Swiss cheese slices, each with holes in it: usually a hole in one slice is blocked by solid cheese in the next, but occasionally the holes line up to create a path through the entire stack. Similarly, in complex failures, multiple small failures line up to create a big one. Visualising a complex system as slices of Swiss cheese reminds us of the role of chance. Errors will always be with us, but we can design systems that notice them and stop them.

As with basic failures, mitigating complex failures requires a proactive approach and learning from past mistakes. However, because these systems are so complex, it also means not downplaying the role of chance and luck.

Intelligent failures

An intelligent failure is the kind we all want to encourage, because it sheds light on something new and interesting. Intelligent, or smart, failures happen in settings that involve a lot of analytical or scientific work. In a typical lab, failure rates can be as high as 70%, and these are the only kinds of failures that should be celebrated. An intelligent failure occurs while pursuing what you believe to be a meaningful opportunity toward a valued goal, and getting the right answer is not the only valued outcome.

How can you tell whether a failure is intelligent? Here are five quick questions that can help you find the answer.

- New territory - do people already know how to get this done some other way?

- Opportunity driven - is there an opportunity worth pursuing, and is the goal valuable?

- Informed by prior knowledge - has the required homework been done and a thoughtful hypothesis formulated?

- As cheap as possible - has the risk been mitigated through a well-designed experiment?

- Bonus - have all the lessons been mined from the failure and the knowledge shared?

A key tenet of smart failures is that they happen when the team possesses a growth mindset. A growth mindset, as defined by Carol Dweck, is the belief that one’s abilities can be developed through dedication and hard work. A fixed mindset, on the other hand, is the belief that one’s abilities are innate and cannot be changed. A growth mindset is characterised by curiosity about what went wrong and a willingness to endure the pain of looking back at the failure to learn from it. A fixed mindset is characterised by a fear of looking back and of the pain of admitting that one was wrong.

I run a startup called Harmonize. We are hiring and if you’re looking for an exciting startup journey, please write to jobs@harmonizehq.com. Apart from this blog, I tweet about startup life and practical wisdom in books.